By Victoria Zhanqi Zhang and Carlos Ponce

When we look at the world, our brains do not just see objects; they extract meaningful features from complex visual scenes. How does the brain make sense of the rich, complex images we encounter every day?

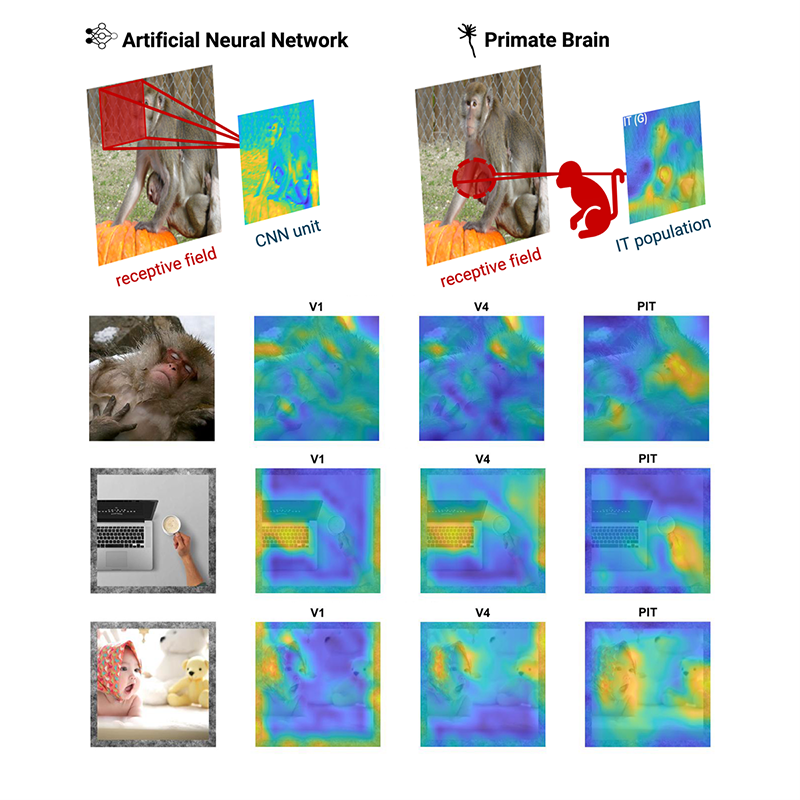

In this new study, we explored how neurons in the primate visual system encode such information using brain feature maps. Brain feature maps are heatmaps that reveal which parts of an image neurons respond to most.

More specifically, a brain feature map shows how groups of neurons respond to different parts of an image, by recording neural activity within their receptive fields, which are the specific visual-field regions where stimuli affect neural firing. By shifting images across these fixed receptive fields and measuring the responses, we generated heatmaps that reveal which visual features each brain area is most sensitive to.
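The shifting procedure can be illustrated with a toy sketch. This is not the analysis code from the study: the response function below is a hypothetical stand-in for recorded firing rates, and the names `simulated_response`, `feature_map`, `rf_size`, and `stride` are assumptions made for illustration only.

```python
import numpy as np


def simulated_response(patch):
    """Hypothetical stand-in for a recorded firing rate: mean patch intensity."""
    return float(patch.mean())


def feature_map(image, rf_size, stride, response_fn=simulated_response):
    """Present each part of the image to a fixed receptive field, one position at a time.

    Shifting the image past a fixed receptive field is equivalent to sampling
    successive image patches, so each heatmap cell stores the response evoked
    by the patch that landed in the receptive field at that shift.
    """
    height, width = image.shape
    rows = (height - rf_size) // stride + 1
    cols = (width - rf_size) // stride + 1
    heatmap = np.zeros((rows, cols))
    for i in range(rows):
        for j in range(cols):
            y, x = i * stride, j * stride
            patch = image[y:y + rf_size, x:x + rf_size]
            heatmap[i, j] = response_fn(patch)
    return heatmap


# Toy image: dark background with one bright "feature".
image = np.zeros((8, 8))
image[2:4, 4:6] = 1.0

heatmap = feature_map(image, rf_size=2, stride=2)
peak = tuple(int(v) for v in np.unravel_index(np.argmax(heatmap), heatmap.shape))
```

In this sketch the hottest cell of `heatmap` marks the image region that drove the simulated response most strongly. In a real experiment, the response at each position would come from recorded neural activity rather than a function of pixel values.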

These patterns emerge without any supervision from the investigator, with no labels or biases, so they offer a more accurate view of how the brain naturally processes the world. Instead of imposing our assumptions about which objects are important, we allow the neurons to reveal what they care about.

The brain does not rely on a single region to process visual information. Rather, it contains a hierarchy of interconnected areas, each responsible for a different level of visual abstraction. We recorded neuronal activity across several of these areas, including V1, V4, and posterior inferotemporal cortex (PIT), while presenting a selection of natural scene images. This gave us one brain feature map per image for each brain area.

What emerged was striking. Across the hierarchy, neurons progressively focused their responses not on arbitrary “objects,” but on ethologically relevant features such as faces, hands, eyes, and other animal parts. In fact, while neurons responded across the full image, their natural inclination was toward animal features. This trend held even in V1 and became sharper in V4 and PIT, possibly reflecting an evolutionarily tuned sensitivity to social signals: a kind of neural intuition, forged by the demands of evolution and real-world survival.


Top: Comparison between feature representations from an artificial neural network, e.g., a convolutional neural network (CNN; left), and brain feature maps from the inferotemporal cortex (IT; right).

Bottom: Example brain feature maps, which are population-level heatmaps of neuronal activity in response to natural scene images (column 1). Heatmaps correspond to three different visual cortical areas in one of the monkeys we studied: V1/V2 (column 2), V4 (column 3), and PIT (posterior inferotemporal cortex, column 4). From V1 to PIT, these heatmaps show increasingly strong responses to animal features, such as faces and hands, rather than to whole objects.

To test whether these responses reflect something uniquely biological, we compared the brain’s heatmaps to those produced by artificial vision systems, such as deep neural networks. We found that very few artificial networks, even those trained on massive datasets, replicated this animal-centric focus.

Together, these results reveal that the primate visual system is tuned not merely for object recognition, but for detecting the specific, ethologically meaningful features most relevant to behavior. The brain feature maps we generated provide a new window into how vision is organized in the brain and offer valuable guidance for designing artificial systems that more closely mirror the way biological vision works.

Victoria Zhanqi Zhang is a Ph.D. student in Computer Science at UC San Diego in the Mishne and Aoi Labs. This study was performed in the Ponce Lab during her B.S.

Learn more in the original research paper:

Brain feature maps reveal progressive animal-feature representations in the ventral stream.

Zhang Z, Hartmann TS, Born RT, Livingstone MS, Ponce CR. Sci Adv. 2025 Apr 25;11(17):eadq7342. doi: 10.1126/sciadv.adq7342. Epub 2025 Apr 25
