by Aleena Garner

Teamwork makes sensory streams work: Our senses work together, learn from each other, and stand in for one another, and the result is perception and understanding. We integrate auditory and visual information, as well as past experience, to process what we hear and see.

The article “Hearing lips and seeing voices” (McGurk and MacDonald, 1976) demonstrated how profoundly learned associations and expectations linking auditory and visual information alter sensory perception. However, more than 40 years later, it remained unknown how neurons in the brain learn to use one sense, such as hearing, to assist or change processing in another sense, such as vision. In our study, we describe a mechanism by which early auditory and visual cortex interact directly through long-range connections. Over the course of learning, this interaction creates a specific association between an auditory and a visual stimulus, which serves to make cross-modal predictions in behaviorally relevant situations.

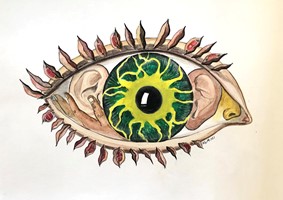

Seeing is more than just vision. Artwork: watercolor painting by Nancy L. Murray.

We let mice explore a virtual room in which we occasionally played a sound (call it sound A) and followed it with an image (call it image A). The mice learned to expect image A whenever they heard sound A, and over the course of learning their visual cortex responded progressively less to image A, but only when it came after sound A. In probe trials, we showed mice image A either without the preceding sound A or following a different sound, sound B, that had not been paired with image A. In both cases, visual cortex responses were much larger (similar in size to those in untrained mice).

Interestingly, sound A and image A needed to be meaningful to the mice. We found strong, consistent suppression of visual responses during the sound A-image A pairing when mice received a reward following the stimuli, but not when the same stimuli were passively heard and seen. Our theory is that the decrease in neural activity in response to image A when it is expected (because it predictably follows sound A) lets the brain spend fewer resources processing information that is already familiar and amplify unpredictable information. This in turn allows us to learn and to integrate new or surprising information into our view of how the world works. We are bombarded with sensory information all day, but we don’t remember everything. However, we do learn and update what we think of the world when sensory events are meaningful to us.

We also wanted to understand what was causing the suppression of responses in visual cortex to images that could be predicted using sounds. There is a large neural projection from auditory cortex to visual cortex in primates and rodents, but scientists have a very limited understanding of this pathway's computational role. To explore this, we artificially stimulated direct input from auditory cortex to visual cortex using a technique we termed ‘FMI’, for Functional Mapping of Influence, which allowed us to tag neurons in visual cortex based on how they respond to input from auditory cortex. Using FMI, we discovered that stimulation of auditory input to visual cortex selectively suppressed the neurons most responsive to image A after, but not before, learning. Importantly, the visual cortex neurons that were inhibited by stimulation of auditory input exhibited the strongest suppression of visual responses following sound A and the strongest recovery of visual responses following sound B. Thus, the auditory-to-visual cortex pathway is plastic (that is, it changes with learning), and at least one of its computational roles is to tell visual cortex what to expect to see after auditory cortex has heard a sound.

Our work demonstrates, for the first time, a mechanism by which early visual cortex supplies an interpretation of visual information based upon a learned relationship with auditory information. Our results show that a change in information flow through long-range cortical connections provides a memory trace that endows early sensory cortex with a mnemonic capacity. We supply a mechanistic understanding of how predictive processing can give rise to both confusion (e.g., illusions) and understanding (e.g., accurate anticipatory behavior).

These findings not only expand our understanding of basic mechanisms of multi-sensory interactions and associative memory storage, but also provide fundamental insight into the neural basis of multi-sensory disorders such as dyslexia, SDD (sensory discrimination disorder), and SMD (sensory modulation disorder). Importantly, our results reveal neural pathways capable of plasticity and thus malleable for therapeutic interventions for such disorders.

Aleena Garner is a Member of the Faculty in the Department of Neurobiology at Harvard Medical School.

Learn more in the original research article:

A cortical circuit for audio-visual predictions. Garner AR, Keller GB. Nat Neurosci. 2021 Dec 2. doi: 10.1038/s41593-021-00974-7. Epub ahead of print. PMID: 34857950.
