I study how our brain transforms the light that hits our eyes into the rich and detailed images we use to understand the visual world around us. My research focuses on how different parts of the brain work together to recognize objects and their relationships in a scene, using techniques from psychology, computer science and neuroscience.

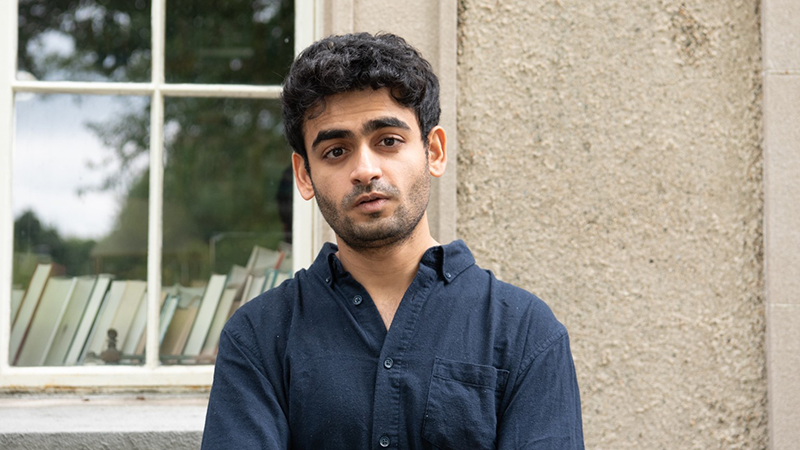

Photo by Celia Muto

What are the big questions driving your research?

Have you wondered how we can look at a jumble of colors, textures, and shapes and instantly recognize a friend’s face or a familiar object? My research delves into this marvel of vision: how our brain transforms the sensory information that hits our eyes into a vivid and meaningful depiction of the world around us, enabling us to make sense of our surroundings, navigate through them, and interact effectively. More specifically, I explore how the brain identifies, computes, and pieces together visual information to arrive at an early representation of an object, which lays the foundation for our intricate visual perceptions. Another facet of my research seeks to understand how we group smaller chunks of visual information to form holistic shapes and objects. By drawing comparisons between human and artificial vision, I hope to decipher the code that we and our artificial counterparts use to interpret the world visually.

To this end, I am exploring the tuning and spatial topographies of proto-/early-object representations in the human brain and mind, the different perceptual grouping mechanisms facilitating the emergence of these representations, and how these representations constrain the visual system towards configural relations between object parts. To achieve these goals, I use a multidisciplinary approach that combines computational models of vision (primarily deep neural networks), behavioral psychophysics, and neuroimaging data. By integrating these techniques, I aim to gain deeper insights into the emergent behavioral and neural signatures of mid-level computations that underlie biological vision.

What drew you to this area of neuroscience?

My interest in neuroscience and visual perception evolved naturally from my academic and research experiences. During a gap year before college, I developed a passion for computer science through game design, which led to the creation of a student-led lab and compute clusters in undergrad. My focus shifted during my third year when I visited UW-Madison. While there, I worked on computational models that could predict steel embrittlement in pressure vessels due to exposure to high-energy neutrons, sparking my interest in machine learning. An internship at Brigham and Women’s Hospital at Harvard Medical School trained me to develop vision models for medical diagnosis. A pivotal moment was attending a talk in 2018 by Jim DiCarlo, Josh Tenenbaum, and David Heeger, which highlighted how artificial vision models can enhance our understanding of biological systems. This inspired me to join the Vision Sciences Lab at Harvard, where I initially worked on intuitive physics and visual working memory in human vision. Gradually, I became fascinated by how our visual system processes and interprets object-based information. This drives my current research on proto-object representations and perceptual grouping mechanisms to uncover the neural and cognitive signatures of mid-level object vision.

What has been the most surprising thing you’ve learned in the lab or classroom so far?

The most surprising thing I’ve learned is how the brain reconstructs accurate 3D representations of the world from sparse and ambiguous visual information. This involves intricate computations and perceptual mechanisms that interpret depth, shading, motion, and other visual cues to create a coherent and accurate representation of our environment. One specific aspect that amazed me is the brain’s ability to infer depth from binocular disparity—the slight difference in images between our two eyes. A seminal study by Julesz in the 1960s introduced random-dot stereograms (2D images that are visual illusions, designed to look 3D), showing that depth perception could occur even without recognizable shapes or patterns, purely based on the disparity between two images. This demonstrated that the brain has specialized mechanisms for interpreting these tiny differences to create a coherent 3D perception, highlighting the incredible complexity and sophistication of our visual system.
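For readers who want to see the idea concretely, here is a minimal sketch of a random-dot stereogram in plain Python. It is an illustration under stated assumptions, not Julesz's original procedure: the function names (`make_stereogram`, `best_shift`), the image size, and the pixel shift are all hypothetical choices made for this example. Each image on its own is just random dots; the only difference between them is that a central square is displaced horizontally in the right-eye image.

```python
import random


def make_stereogram(size=64, square=24, shift=4, seed=0):
    """Return (left, right) random-dot images as lists of rows of 0/1.

    All parameters are illustrative assumptions, not values from any study.
    """
    rng = random.Random(seed)
    left = [[rng.randint(0, 1) for _ in range(size)] for _ in range(size)]
    right = [row[:] for row in left]  # start from an identical copy
    start = (size - square) // 2
    end = start + square
    for y in range(start, end):
        # Displace the central square `shift` pixels leftward in the right image.
        for x in range(start, end):
            right[y][x - shift] = left[y][x]
        # Fill the strip uncovered by the displacement with fresh random dots.
        for x in range(end - shift, end):
            right[y][x] = rng.randint(0, 1)
    return left, right


def best_shift(left, right, rows, cols, max_shift=8):
    """Estimate disparity: the horizontal shift that best aligns a patch."""
    def mismatches(s):
        return sum(left[y][x] != right[y][x - s] for y in rows for x in cols)
    return min(range(max_shift + 1), key=mismatches)
```

Calling `best_shift(left, right, range(20, 44), range(20, 44))` on the default stereogram should recover the shift applied to the central square, while the same call on a background patch such as `range(0, 10), range(8, 60)` should find no shift. Neither image contains a visible square, so the only cue separating the two regions is the disparity between the images.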

What is the trait you most admire in others?

The traits I admire most in others are humility and genuine curiosity. I deeply respect people who have a relentless desire to learn and grow. That desire drives innovation and fosters a culture of continuous improvement, leading individuals to ask questions, challenge the status quo, and ultimately solve problems with accountability and integrity. These characteristics, coupled with compassion and respect for others, create a powerful foundation for meaningful contributions to any field.